… Asked the brain about itself. Typical narcissistic brain behavior, don’t see the other organs doing it.

My bowels have been questioning a lot lately, so it’s not entirely without precedent.

Yea, what about your liver? Have you asked how it feels today?

The liver got the toughest job out there. He got no time for himself. Poor liver.

If all else fails, a kick in the nuts is a reality check

Basically, yes. Our eyes capture the light that goes into them at 24 frames per second (please correct me if I goofed on that) and the image is upside down.

Our brains turn those images upright, and they also fill in the blanks. The brain basically guesses what’s going on between the frames. It’s highly adept at pattern recognition and estimation.

My favorite example of this is our nose. Look at your nose. You can look down and see it a little, and you can close one eye and see more of it. It’s right there in the bottom center of our view, but you don’t see it at all every day.

That’s because it’s always there, and your brain filters it out. The pattern of our nose being there doesn’t change, so your brain just ignores it unless you want to intentionally see it. You can extrapolate that to everything else. Most things the brain expects to see, and does see through our eyes, are kind of ignored. They’re there, but they’re not as important as, say, anything that’s moving.

Also, and this is fun to think about, we don’t even see everything. The color spectrum is far wider than what our eyes can recognize. There are animals, sea life and insects that can see much much more than we can.

But to answer more directly, you are right, the brain does crazy heavy lifting for all of our senses, not just sight. Our reality is confined to what our bodies can decipher from the world through our five senses.

We definitely are seeing things faster than 24 Hz, or we wouldn’t be able to tell a difference in refresh rates above that.

Edit: I don’t think we have a digital, on-off refresh rate of our vision, so fps doesn’t exactly apply. Our brain does turn the ongoing stream of sensory data from our eyes into our vision “video”, but compared to digital screen refresh rates, we can definitely tell a difference between 24 and say 60 fps.

Yeah it’s not like frames from a projector. It’s a stream. But the brain skips parts that haven’t changed.

People looking at a strobing light start to see it as just “on” (not blinking anymore) at almost exactly 60Hz.

In double blind tests, pro gamers can’t reliably tell 90fps from 120.

There is, however, an unconscious improvement to reaction time, all the way up to 240fps. Maybe faster.

It seems to be more complicated than that

However, when the modulated light source contains a spatial high frequency edge, all viewers saw flicker artifacts over 200 Hz and several viewers reported visibility of flicker artifacts at over 800 Hz. For the median viewer, flicker artifacts disappear only over 500 Hz, many times the commonly reported flicker fusion rate.

The real benefit of super high refresh rates is the decrease in latency for input. At lower rates the lag between input and the next frame is extremely apparent; above about 144 Hz it’s much less noticeable.
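To put rough numbers on the latency point: a minimal sketch, assuming the only delay is waiting for the next refresh (real input lag also includes the game loop, the display's own processing and so on). The function name is made up for illustration.

```python
def frame_interval_ms(refresh_hz: float) -> float:
    """Time between two consecutive refreshes, in milliseconds."""
    return 1000.0 / refresh_hz


# Worst case, an input arrives just after a refresh and waits a full interval.
for hz in (24, 60, 144, 240):
    print(f"{hz:>3} Hz -> up to {frame_interval_ms(hz):.1f} ms until the next frame")
```

Going from 60 Hz to 144 Hz cuts that worst-case wait from about 16.7 ms to about 6.9 ms, while going from 144 Hz to 240 Hz only saves a further few milliseconds.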

The other side effect of running at high fps is that when heavy processing occurs and there are frame time lags, they’re much less noticeable because the minimum fps is still very high. I usually tell people not to pay attention to the maximum fps, but rather to look at the average and min.
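Here is a small sketch of why the maximum is the least useful number, assuming you have a list of per-frame render times in milliseconds. The sample data and the function name are hypothetical, not taken from any benchmarking tool.

```python
def fps_stats(frame_times_ms):
    """Return (max_fps, avg_fps, min_fps) for per-frame render times in ms."""
    total_s = sum(frame_times_ms) / 1000.0
    avg_fps = len(frame_times_ms) / total_s      # frames actually shown per second
    max_fps = 1000.0 / min(frame_times_ms)       # set by the fastest single frame
    min_fps = 1000.0 / max(frame_times_ms)       # set by the slowest single frame
    return max_fps, avg_fps, min_fps


# Hypothetical capture: nine quick 4 ms frames and one 20 ms hitch
sample = [4.0] * 9 + [20.0]
max_fps, avg_fps, min_fps = fps_stats(sample)
print(f"max {max_fps:.0f} fps, avg {avg_fps:.0f} fps, min {min_fps:.0f} fps")
```

The single hitch barely moves the maximum but drags the minimum down to 50 fps, which is the part you would actually feel.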

I think having higher frame rates isn’t necessarily about whether our eyes can perceive the frame or not. As another commenter pointed out there’s latency benefits, but also, the frame rate affects how things smear and ghost as you move them around quickly. I don’t just mean in gaming. Actually, it’s more obvious when you’re just reading an article or writing something in Word. If you scroll quickly, the words blur and jitter more at low frame rates, and this is absolutely something you can notice. You might not be able to tell the frametime, but you can notice that a word is here one moment and next thing you know, it teleported 1 cm off

I think I read that fighter pilots need to be able to identify a plane in one frame at 300 fps, and that the theoretical limit of the eye is 1000+ fps.

Though, whether the brain can manage to process the data at 1000+ fps is questionable.

I’m using part of this comment to inform my monitor purchases for the rest of my life.

New 1,200 Hz displays? Well, it did say 1,000-plus…

Both of these claims are kinda misguided. The brain is able to detect very short flashes of light (say, 1 thousandth of a second), and other major changes in light perception. Especially an increase in light will be registered near instantly. However, since it doesn’t have a set frame rate, more minor changes in the light perception (say, 100 fps) are not going to be registered. And the brain does try to actively correct discontinuities, that’s why even 12 fps animation feels like movement, although a bit choppy.

I would believe it if someone told me that an individual rod or cone in the eye was 24fps but they’re most likely not synched up

You’re right that it is a continuous process, so there’s no frame rate as such. 24 fps is just the lowest framerate that doesn’t look “framey” in videos, but we can go as low as 12 and still reliably perceive it as movement, which is why most stop motion films are done at 12fps.

The amount of motion blur we see on fast moving objects is similar to a 24fps camera with a normal shutter angle setting, but we don’t perceive any blur when we turn our heads or move our eyes like a camera at that fps does.

There’s also our reaction time, which can vary a lot depending on a plethora of factors, but averages around 250ms, which is similar to 4fps 😅… Or maybe since it’s a continuous process it’s more like ♾️fps but with a 250ms delay 🤔😅

Generally humans don’t perceive a difference above 60 Hz.

Completely untrue and not even up for debate. You’d know this if you had ever used a high-refresh rate display.

Let me guess, you’ve also bought a Gamer Chair to go with your overpriced 144 Hz display.

So, have you actually used a 144 Hz display yourself?

Yes, I have observed a complete lack of improvement.

So what you meant to say is that you don’t see a difference above 60 Hz. But other people definitely can tell the difference. Don’t generalize on everyone based on your own experiences.

It’s noticeable, it’s just not massive. My phone screen runs at 120hz but I don’t notice a difference unless I’m scrolling rapidly. Gaming culture (driven by corporations) really overemphasizes its importance. Gamers as a group seem to be easily duped by impressive sounding numbers, just like the rest of the population.

Also keep in mind there likely isn’t a lot of selective pressure on biological vision refresh rate, so I wouldn’t be surprised if there’s a fair amount of variability in the ability to discern a difference amongst individuals.

Finally, a reasonable reply to my comment.

It’s a hot button topic for some people. I’m for the biological variation explanation - some people seem to really notice a difference while others don’t.

I think what the people who get really upset notice is that they dropped a few extra $100s on what’s often largely a marketing gimmick.

the 24 fps thing is one hella myth. our cones and rods send a continuous stream of information, which is blended with past-received information in our perception to remove stuff like the movement from darting your eyes around.

24fps vision is a lie told by Hollywood so they can save on film

It’s the lowest fps they can go without it being horrid, really.

It is horrid. I get nauseous whenever a low framerate video has any significant motion

Cloverfield needs a 420 fps remaster.

Also, your eyes dart around and you only see a little patch. You blink. Your brain makes up a nice stable image of the world, mostly consisting of things that your brain thinks should be there.

If you want a fun experiment of all the things we see but don’t actually process, I recommend the game series I’m On Observation Duty. You flip through a series of security cameras and identify when something changed. It’s incredible when you realize the entire floor of a room changed or a giant thing went missing, and you just tuned it out because your brain never felt a need to take in that detail.

It’s sorta horror genre and I hate pretty much every other horror thing, but I love those games because they make me think about how I think.

Ha, I’ve heard of that one so I caught it. I missed 3 of the passes, though!

That sounds pretty interesting. Thanks

The 24fps thing sounds wrong. That’s not even a cinematic 30.

I find myself often wondering what colors look like to other people because there is no way to know for sure that what I see as red looks the same to everyone else. It’s just a frequency of light. How the brain interprets that is anybody’s guess. I can’t describe the difference of red vs blue and I’ve never met anyone else who could either. Maybe what I see as red is actually what I see as blue to someone else.

Apart from the philosophical aspect which is unanswerable, I feel certain we see equivalent colors. This is an interesting article about it. https://en.wikipedia.org/wiki/CIE_1931_color_space Scientists found what the three primary colors our eyes see are. Because of the overlap in cone activation they’re actually imaginary colors that don’t exist.

What the eyes do when receiving information isn’t the focus; the uncertainty comes from how the signals they send to the brain are interpreted. Everyone will have the same data. How the brain renders it in our mind may not be the same.

I’m red-green colourblind (deuteranopia) and often think this exact thing, how the reality I perceive is different from others purely due to this.

I can’t describe the difference of red vs blue and I’ve never met anyone else who could either.

In the Mask movie there is a great scene where he demonstrates colors to his blind girlfriend. They did a really great job with it.

I’ve had this thought many times, glad I’m not alone. Also makes you wonder if possibly everyone’s “favorite” color is the same color, we just all call it different things because of how we individually perceive it.

This is a fun thought, but I can disprove this myself easily enough due to having had my favorite color change multiple times in my lifetime. Currently enjoying green.

We are the same. Right down to current favorite color. 😃

Well, it really makes sense for these very specifically tuned biological machines to all function more or less the same way.

Everything we can glean from neurology pretty much says our perceptions are similar, we just process them differently.

Red is a longer wavelength than blue. It would make no sense for the brain to interpret long wavelengths as short or short as long, which is probably why our colour perceptions are more or less the same.

Language affects our perception more than the biological hardware we have. The physical sensations are similar to everyone, but processing them is different. Which is why it could still be that your red isn’t my red. But my point is I don’t think it’d ever be blue or green in any context. It’d be different, perhaps, but not fundamentally so.

The ancient Greeks used to call the sky bronze. Related, there was this cool short the other day. Talked about how someone raised their kid normally other than carefully making sure never to say what colour the sky is, and then later inquiring about it. The girl had trouble at first, but calling it some mix of white and blue. The point in that was that kids learn colours somehow related to other objects. And the sky, as “an object”, is a very different category and was thus weird for her to assign a colour to.

Unrelated rant over

Scientific research indicates we see colors pretty damn similarly, with edge cases for colorblindness and also people who are more color sensitive.

One way this can be studied is by studying the metamerism of different colors by different observers. Metamerism is the study of how colors change given different light sources.

There are other objective qualities that give hints that we have similar ways of experiencing colors. You mention that colors are nothing more than our brain assigning “color” to frequency of light – but light is itself just a frequency of electromagnetic radiation, namely the frequencies that make up the bulk of the radiation emitted by the sun.

So to a normal observer without colorblindness, there are more variants of colors of green than any other color. Green is of course situated in the very center of the ROYGBIV spectrum, it is the “most visible” color. The colors with the least amount of variations are red and violet, which are situated at the edges. Frequencies above violet or below red become invisible, making up ultraviolet and infrared radiation respectively.

Where we get tricked up, and I used to have identical suspicions as you did, is that we consider color to be purely subjective, because we aren’t taught to unify subjectivity and objectivity into a united whole. Color isn’t completely imagined, there are certain surfaces that absorb and reflect certain frequencies of EM radiation just as the structures in our brain that process this ocular input are more or less similar. Things that are subjective aren’t usually associated with being “real” the same way that objectively “real” things that exist out in the phenomenal world are. However, color is socially real, we can almost all identify colors that are the same and colors which are different. Since the set of colors which are “red” are fewer than the set of colors which are “green” then there is no way that what I experience as red is the same as what you experience as green. Artists use colors to convey emotion and are able to achieve this with many many different observers. Warm colors are warm, and cool colors are cool. There may be different levels of sensitivity but in my experience this can be somewhat trained into an observer though no doubt there are outliers who have a unique sensitivity to color differences.

So there are objective factors which align with subjective factors let’s say 90% of the time, which strongly supports the idea that we experience color more or less the same way. The trouble is not that subjectivity and objectivity are irreconcilable, in fact it is when we fail to reconcile them that our troubles begin. In my opinion, this is a huge problem that creates all kinds of issues when we try to relate to each other; it may be the most prominent philosophical problem of our age. Luckily it is fairly easily remedied with a slight change in the way we think about subject and object. It’s useful to separate them sometimes but we need to be able to reunify them, which just takes practice in my experience.

I, too, think about this all the time.

Maybe what I see as red is actually what I see as blue to someone else.

This is a very common interesting thought, but what I’ve started thinking is even more interesting is this related thought:

Why does red look like it does, to you? I’m not concerned with how other people see red here, I’m just thinking about a single person (me or yourself, for instance). Why does red look like that? Why not differently? Something inside your eyes or your brain must be deciding that.

You could say “oh it’s because red is this and that wavelength” but what decides that exactly that wavelength looks like that (red)? There must be some physical process that at some point makes the qualia that is red - but how does it do that? The qualia that is red seems to be entirely arbitrary and decidedly not a physical thing. It is just a sensation, an experience, a qualia. But your eyes/brain somehow decides that ~650 nm wavelength translates to exactly that qualia. What decides that and how?

And even then, the same physical red can look darker, lighter, washed out, more vivid etc. depending on the surrounding colours. I mean, maybe what I see as white and gold, someone else sees as blue and black.

You can test this: https://ismy.blue/

Obviously not perfect since it depends on screens and lighting conditions.

One thing I find very interesting about how brains process reality is that there’s a disease that makes your eyes have blind spots. However people with that disease don’t see those blind spots because the brain fills the gaps with the information it knows to be there. So you could see a door closed just as it was when you last looked at it directly, but in the meantime someone opened the door and you’re still seeing the door closed until you look at it directly.

We all have blind spots because there’s a hole in the retina in the back of the eye for the optic nerve. The spots are located on the outer top side of our field of view and you can become aware of them with some visual tests online.

Another fun thing you can do is look at the sky (not the sun!) on a sunny day and start seeing your blood circulation and blind spot.

It sounds like Op is describing motion blindness.

I do not know how those people function.

The top commenter is correct. It’s why when you glance at a clock with a second hand, it can seem like it takes too long for it to move for the next second. It moved as you moved your eyes, and your brain didn’t make up the movement.

There’s a rare disease called prosopometamorphopsia that turns people’s faces into demon faces, and it can be partially relieved by observing things under different colored light.

I don’t think it’s the brain but rather our consciousness that is limited. Our sensory inputs are always on and processed by the brain, but our consciousness is very picky and also slow.

People can sometimes recall true memories that they weren’t aware of, or react to things they didn’t think of and such.

Consciousness is also somehow lagging behind the actual decision making, but always presents itself as the cause of action.

Sort of like Windows telling you that you removed a USB stick 2 seconds after you did it and were well aware of it happening. Consciousness is like that, except it takes responsibility for it too…

When it encounters something that it didn’t predict, it’ll tell you that “yeah this happened and this is why you did that”. Quite often the explanation for doing something is made up after it happened.

This is a good thing mostly, because it allows you to react faster than having to consider your options consciously. You do not need to or have time to make a conscious decision to dodge a dodgeball, but you’ll still think you did.

NAILED IT! Yeah, our subconscious is driving and only sends an executive summary up top. And we think, “I did this!” Nah. You didn’t. You are just along for the ride.

People hate this notion because it negates free will. Well, yeah, it kinda does.

Everybody reading these comments and considering the implications needs to go read Blindsight by Peter Watts. It’s a first contact story set in the near-ish future, and really goes into consciousness and intelligence. Very thought provoking if you thought this comment chain was interesting.

You can train your subconscious! Well, at least influence its decisions. Videogames are a great example. Trained reaction/response. Repeated response to similar stimulus can create a trained subconscious response.

However, I have difficulty, especially now that I’m older, where subconscious and conscious will compete and I will lose acuity of what I actually did.

That and my memory is getting worse. :/

It is also possible to consciously alter the subconsciousness. For instance, by creating sensory input for yourself by saying things out loud to a mirror. Your ears will hear it, your eyes will see it, and your subconsciousness will then process it just the same as any other experience.

With enough repetition it will make a difference in which neurons are active whenever the brain comes to making a decision on that thing.

That assumes “you” are just the conscious part. If you accept the rest of your brain (and body) as part of “you”, then it’s a less dramatic divide.

When it encounters something that it didn’t predict, it’ll tell you that “yeah this happened and this is why you did that”. Quite often the explanation for doing something is made up after it happened.

There are interesting stories about tests done with split-brain patients, where the bridge connecting the left and right brain hemispheres, the corpus callosum, is severed. There are then ways to provide information to one hemisphere, have that hemisphere initiate an action, and then ask the other hemisphere why it did that. It will immediately make up a lie, even though we know that’s not the actual reason. Other than being conscious, we’re not that different from ChatGPT. If the brain doesn’t know why something happened, it’ll make up a convincing explanation.

These tests seem interesting, where can I read about these tests?

They’re very interesting, but also quite spooky as sometimes it seems to indicate there’s two different minds inside your head that are not aware of each other.

If you search for “split brain experiments” you should be able to find more.

This isn’t really what OP is talking about.

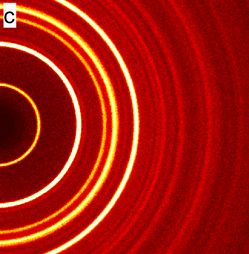

We really can’t see very well at all outside of the centre of our focus. This paper says 6 degrees, I heard this as a coin held at arm’s length.

Our minds “render” most of the rest of what we think we see.

You’re right that we discard most of our sensory inputs, but with visual inputs there’s much less data than it appears.

You’re right. OP’s second question is more specifically about vision, while I answered more broadly.

Anyway, comparing it to data from a camera is not really possible.

Analog vs. digital and so on, but also in the way that we experience it.

The mind’s interpretation of vision is developed after birth. It takes several weeks before an infant can recognise anything and use the eyes for any purpose. Infants are probably blissfully experiencing meaningless raw sensory inputs before that. All the pattern recognition that is used to focus on things consists of learned features and so is also dependent on actually learning them.

I can’t find the source for this story, but allegedly there was this missionary in Africa who came across a tribe who lived in the jungle and were used to being surrounded by dense forest their entire lives. He took some of them to the savannah and showed them the open view. They then tried to grab the animals that were grazing miles away. They hadn’t developed a sense of perspective for things at longer distances, because they’d never experienced it.

I don’t know if it’s true, but it makes a point. Some people are better at spotting things in motion or telling colours apart etc. than others. It matters how we use vision. Even in the moment. If I ask you to count all the red things in a room, you’ll see more red things than you were generally aware of. So the focus is not just the 6° angle or whatever. It’s what your brain is recognising for the pattern in mind.

So the idea of quantifying vision to megapixels and framerate is kind of useless in understanding both vision and the brain. It’s connected.

Same with sound. Some people have proved able to use echolocation similar to bats. You could test their vision blindfolded and they’d still make their way through a labyrinth or whatever.

Testing senses is difficult because the brain tends to compensate in that way. It’d need to be a very precise testing method to make any kind of quantisation for a particular sense.

Your brain is constantly processing the inputs from all of your senses and pretty much ignoring them if they fit with what it is already expecting.

Your brain is lazy. If everything seems to fit with what your brain expects then you believe that what you are seeing is reality and you generally ignore it.

Generally the mind only focuses on what it believes is salient/interesting/unexpected.

Imagine if we had image sensors that could filter like that. Boom, video 100 times smaller in size. “Autonomous” surveillance cameras running on fractions of the power. Etc. Etc. Just far more efficient.

That’s actually how a lot of video codecs work, they just throw a key frame in every so often that has the full image so you can just do diffs for the rest of the frames till the next key frame.

We do have those things. That’s how many technologies already work.

Yes. We get hints of this now and then when digital TV breaks up and only the moving parts are updating until the next key frame arrives.
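If anyone wants to see the key frame idea in miniature, here is a toy sketch. It assumes frames are just flat lists of pixel values and stores literal per-pixel changes; real codecs predict motion between frames rather than diffing raw pixels, so treat the function names and the format as made up for illustration.

```python
def encode(frames, keyframe_every=4):
    """Encode equal-length frames as periodic key frames plus per-pixel diffs."""
    encoded = []
    prev = None
    for i, frame in enumerate(frames):
        if i % keyframe_every == 0:
            # Key frame: store the full image
            encoded.append(("key", list(frame)))
        else:
            # Diff frame: store only (index, new_value) for pixels that changed
            changes = [(j, v) for j, (v, old) in enumerate(zip(frame, prev)) if v != old]
            encoded.append(("diff", changes))
        prev = frame
    return encoded


def decode(encoded):
    """Rebuild the full frames by applying each diff to the previous frame."""
    frames = []
    current = None
    for kind, payload in encoded:
        if kind == "key":
            current = list(payload)
        else:
            current = list(current)  # copy so earlier frames stay untouched
            for j, v in payload:
                current[j] = v
        frames.append(current)
    return frames


# A tiny 4-pixel "video": a bright pixel appears, stays put, then moves
frames = [
    [0, 0, 0, 0],
    [0, 9, 0, 0],
    [0, 9, 0, 0],
    [0, 0, 9, 0],
]
encoded = encode(frames)
assert decode(encoded) == frames
print(encoded)
```

The frame where nothing changed costs an empty list, which is the "brain skips parts that haven't changed" idea. It also shows why a lost key frame leaves only the moving parts updating: the diffs have nothing correct to apply to.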

Here’s an interesting related factoid - your eyes are constantly making tiny micromovements called saccades. During these movements, you don’t receive any visual information. Your actual view of the world comes in stuttering fits and starts. You don’t notice this because your brain literally invents what you think you’re seeing during saccades. It’s good enough not to get you weeded out of the gene pool.

Yeah, your brain makes up an approximation of reality at best. It’s the weirdest fucking thing.

I heard a similar thing. But a bit more complicated. It wouldn’t be just the eyes, but all senses used by the brain to edit a filtered vision of reality.

And while the eyes take in everything they’re capable of, the brain only focuses on what it considers important. Which is probably false due to the many, many times one will search for something within their cone of vision, yet are unable to see it.

So while I’m not sure of the details, the brain can be thought of as choosy with what it shows.

You can stop time by looking at a clock hand briefly. It’s your brain filling in blanks.

https://www.popsci.com/science/why-do-clock-hands-seem-to-slow-down/

Why do you see a unified image when you open your eyes, even though each part of your visual cortex has access to only a small part of the world? What is special about the wrinkled outer layer of the brain, and what does that have to do with the way that you explore and come to understand the world? Are there new theories of how the brain operates? And in what ways is it doing something very different than current AI? Join Eagleman with guest Jeff Hawkins, theoretician and author of “A Thousand Brains” to dive into Hawkins’ theory of many models running in the brain at once.

And in what ways is it doing something very different than current AI?

That’s equal to the question “What differentiates a screw from a car?”.

How do our brains process reality? Like this.

This one got me.

I mean all animals have brains to render reality, aka visual, audio, predator awareness. It’s not so special, most animals have tiny little brains.

Some animals, such as certain deep water crustaceans (Mantis Shrimp) and cephalopods (Cuttlefish) can see more colors than most mammals, and their brains are often smaller.

And in the end “reality” is just excitations in quantum fields. And you “perceive” mostly electromagnetic forces.

The theory you’re referring to sounds like the free energy principle (or a variation of it).