I hadn’t heard about thermodynamic computing before, but it sounds pretty interesting. As IEEE Spectrum explains, “the components of a thermodynamic chip begin in a semi-random state. A program is fed into the components, and once equilibrium is reached between these parts, the equilibrium is read out as the solution. This computation style only works with applications that involve a non-deterministic result … various AI tasks, such as AI image generation and other training tasks, thrive on this hardware.” It sounds almost like quantum computing to my layperson ears. [edit: fixed link]

In the early days of computing, people experimented with different signaling schemes between electronic components (valves and later transistors). Decimal and ternary were used and then abandoned because binary is much easier to implement and more noise resistant.

What we do today is simulate non-deterministic (noisy) signals in LLMs using deterministic (binary) signals, which is a massively inefficient way to do it.

I expect more chips with a neuron-like architecture will be coming out in the next few years. It would certainly be a benefit for the environment, as the cat will not get back in the bag.

Hopefully I get to see one before getting cremated along with my entire family at the alligator Auschwitz.

I believe the implication is “eaten by alligators”

running them ovens is expensive …

Florida man at it again?

Sloppier compute architecture needed to drive down costs on sloppier method of computing.

When the results don’t matter, use “Does not matter chips”. They work 3% of the time, 100% of the time. BUT they consume way less power! Great for any random statistics: if the results do not match what you want, then just press again! Buy “Does not matter chips” now!

Slop has nothing to do with it. Some problems just aren’t deterministic and this sort of chip could be a massive performance and efficiency boost for them. They’re potentially useful for all sorts of real world simulations and detection problems.

If it makes AI cheaper then great because AI is a massive fucking waste of power, but other than that I am grossed out by this tech and want none of it.

up to 1000x energy consumption efficiency in these workloads

Seems like a win to me

This is it literally. (granted I’m sure there are other use cases, but you know they’re following those AI-dollars)

It makes some sense to handle self-discovered real numbers of infinite precision using analog methods, though I’m curious about how they handle noise, since in the real world and unlike the mathematical world all storage, transmission and calculations have some error.

That said, my experience way back with a project I did at Uni with Neural Networks in their early days, is that they’ll even make up for implementation bugs (we managed about 85% rate of number recognition with a buggy implementation) so maybe that kind of thing is quite robust in the face of analog error.

Man I would love to have access to chips like this.

Probabilistic computing would really benefit from this, I would invest in the company producing these.

Thermodynamic chips are a world apart from traditional computing — closer in practice to the realms of quantum and probabilistic computing. Where noise is the enemy of standard electronics, thermodynamic and probabilistic chips actively use noise to solve problems.

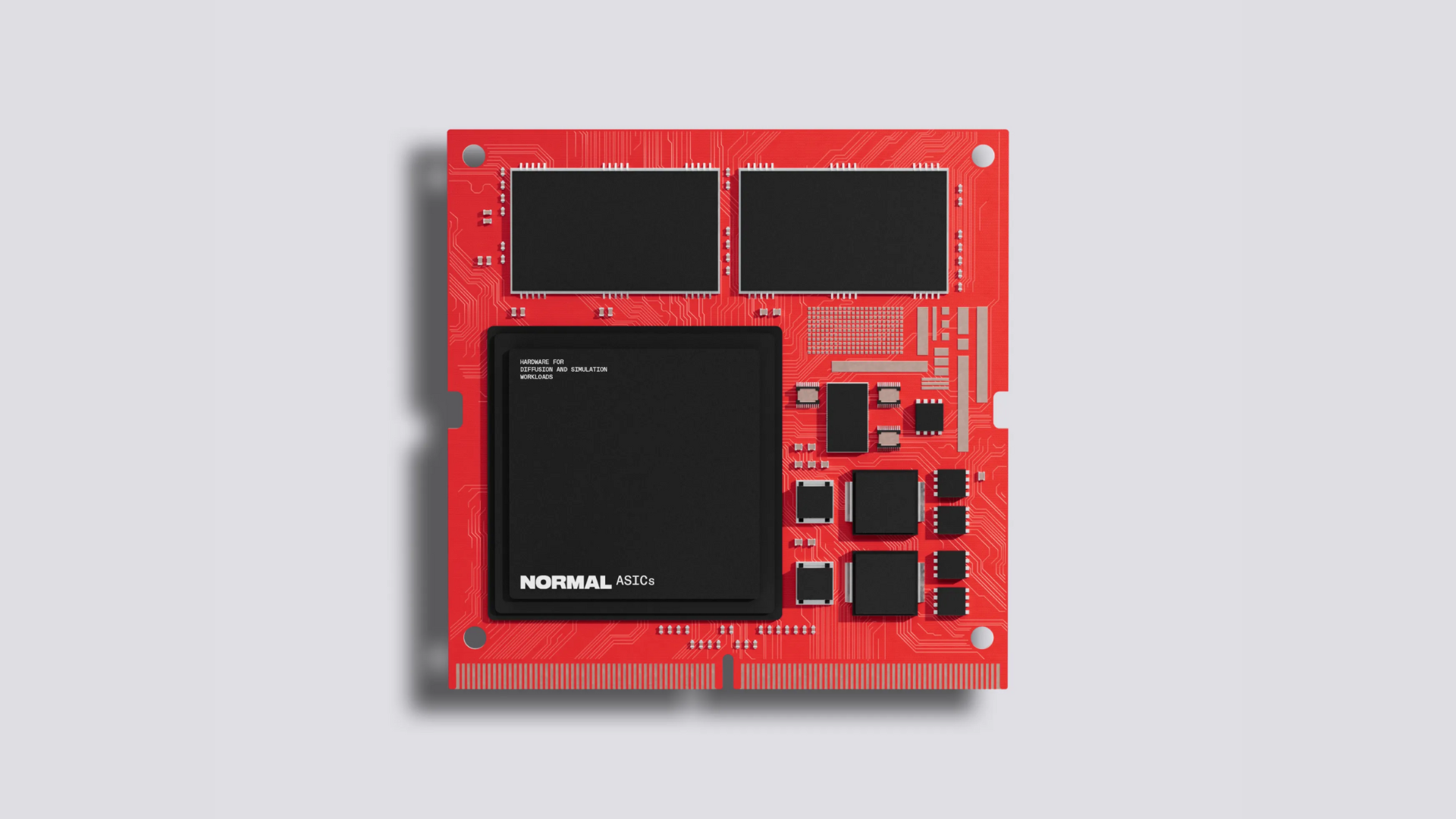

“We’re focusing on algorithms that are able to leverage noise, stochasticity, and nondeterminism,” said Zachary Belateche, silicon engineering lead at Normal Computing, in a recent interview with IEEE Spectrum. “That algorithm space turns out to be huge, everything from scientific computing to AI to linear algebra.”

Link in the description repeats http in a weird place and breaks it.

Here’s a working version: https://spectrum.ieee.org/thermodynamic-computing-normal-computing

thanks, fixed.

this is vx-adjacent i feel. really should set up a community for that.

Ohhhhhh they reached tape out.

Right. Right.

For non-deterministic solutions. For AI.

I mean, what’s not to love?

Lol, but there are lots of applications for nondeterministic computing that are not LLMs. Some of the famous-y ones would be like Monte Carlo Tree Search (MCTS), which is used in reinforcement learning (e.g., AlphaGo) to explore game trees probabilistically, Markov Chain Monte Carlo (MCMC), where you use randomness to sample from complex distributions (e.g., Bayesian inference), and zero-knowledge proofs, where you use randomness to verify information without revealing it. You could probably get an LLM to make a longer list :)
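To make the MCMC item a bit more concrete, here is a minimal sketch of a random-walk Metropolis sampler, one of the simplest MCMC methods. The function name `metropolis`, its parameters, and the standard-normal target are all made up for illustration; real Bayesian inference would plug in a much messier density, and this says nothing about how a thermodynamic chip would actually do it.

```python
import math
import random


def metropolis(log_density, num_samples, step=1.0, start=0.0, seed=None):
    """Random-walk Metropolis sampler for a 1D unnormalized log-density.

    All names here are illustrative assumptions, not any library's API.
    """
    rng = random.Random(seed)
    x = start
    log_p = log_density(x)
    samples = []
    for _ in range(num_samples):
        # Propose a nearby point by adding Gaussian noise.
        proposal = x + rng.gauss(0.0, step)
        log_p_new = log_density(proposal)
        # Accept with probability min(1, p_new / p_old).
        if log_p_new >= log_p or rng.random() < math.exp(log_p_new - log_p):
            x, log_p = proposal, log_p_new
        # A rejected proposal repeats the current point in the chain.
        samples.append(x)
    return samples


def log_standard_normal(x):
    # Unnormalized log-density of N(0, 1); the constant term is not needed.
    return -0.5 * x * x


if __name__ == "__main__":
    chain = metropolis(log_standard_normal, 50_000, seed=0)
    kept = chain[5_000:]  # drop burn-in
    mean = sum(kept) / len(kept)
    var = sum((v - mean) ** 2 for v in kept) / len(kept)
    print(f"mean ~ {mean:.3f}, variance ~ {var:.3f}")
```

The sampler only ever needs ratios of the density, which is why it works for distributions whose normalizing constant is unknown.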

The EU already wants to implement the zero-knowledge proofs for age verification

It’s likely also one of the best solutions, if I understood it correctly, because the EU would only know that one received a bunch of single-use certificates(?), but not where they got used, and the website would not get any personal data.

Zero-knowledge proofs are awesome, look them up.

( ͡° ͜ʖ ͡°)=ε/̵͇̿̿/’̿’̿ ̿ ̿̿ ̿̿ ̿̿ (this is a threat but the weapon is not loaded)

It does sound cool, though I’ve never heard of some of those things.

Monte Carlo methods are where you use randomness to simulate complex problems that are hard to model exactly.

As a simple case, let’s say you didn’t know the value of π, but you were able to generate random numbers really quickly. If you make a square of side d, draw a circle of diameter d inside it, and then randomly place points in the square, the circle covers π/4 of the square’s area, so you can calculate

π ≈ 4 × (fraction of points in the circle).

A more complex use would be to apply the same idea to things like modelling wildfires. If you could generate tons of plausible scenarios, then you can determine the most likely routes a forest fire will take.

These methods are used a lot in epidemiology, nuclear physics, astronomy, etc.
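For anyone who wants to try the π example, here is a minimal sketch in Python. It assumes a square of side 2 centred on the origin with a unit circle inside, so the circle covers π/4 of the square and the estimate is 4 × (fraction of points in the circle). The function name `estimate_pi` and its parameters are made up for illustration.

```python
import random
from typing import Optional


def estimate_pi(num_points: int, seed: Optional[int] = None) -> float:
    """Estimate pi by throwing random points at a square with an inscribed circle."""
    rng = random.Random(seed)
    inside = 0
    for _ in range(num_points):
        # Sample a point uniformly in the square [-1, 1] x [-1, 1] (side d = 2).
        x = rng.uniform(-1.0, 1.0)
        y = rng.uniform(-1.0, 1.0)
        # The inscribed circle has radius d / 2 = 1.
        if x * x + y * y <= 1.0:
            inside += 1
    # Area ratio circle / square = pi / 4, so pi ~ 4 * fraction inside.
    return 4.0 * inside / num_points


if __name__ == "__main__":
    for n in (1_000, 100_000, 1_000_000):
        print(n, estimate_pi(n, seed=42))
```

The error shrinks roughly like 1/√n, so each extra digit of accuracy costs about 100 times more points. That slow convergence is why cheap, fast randomness matters for these methods.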