So I'm trying to get Unraid set up for the first time. I'm still running the free trial, and assuming I can get this working I do plan on purchasing it, but I'm starting to get frustrated and could use some help.

I previously had a Drobo, but that company went under, so I decided to switch to an Unraid box because, as far as I can tell, it's the only other NAS solution that will let me upgrade my array over time with mixed drive capacities.

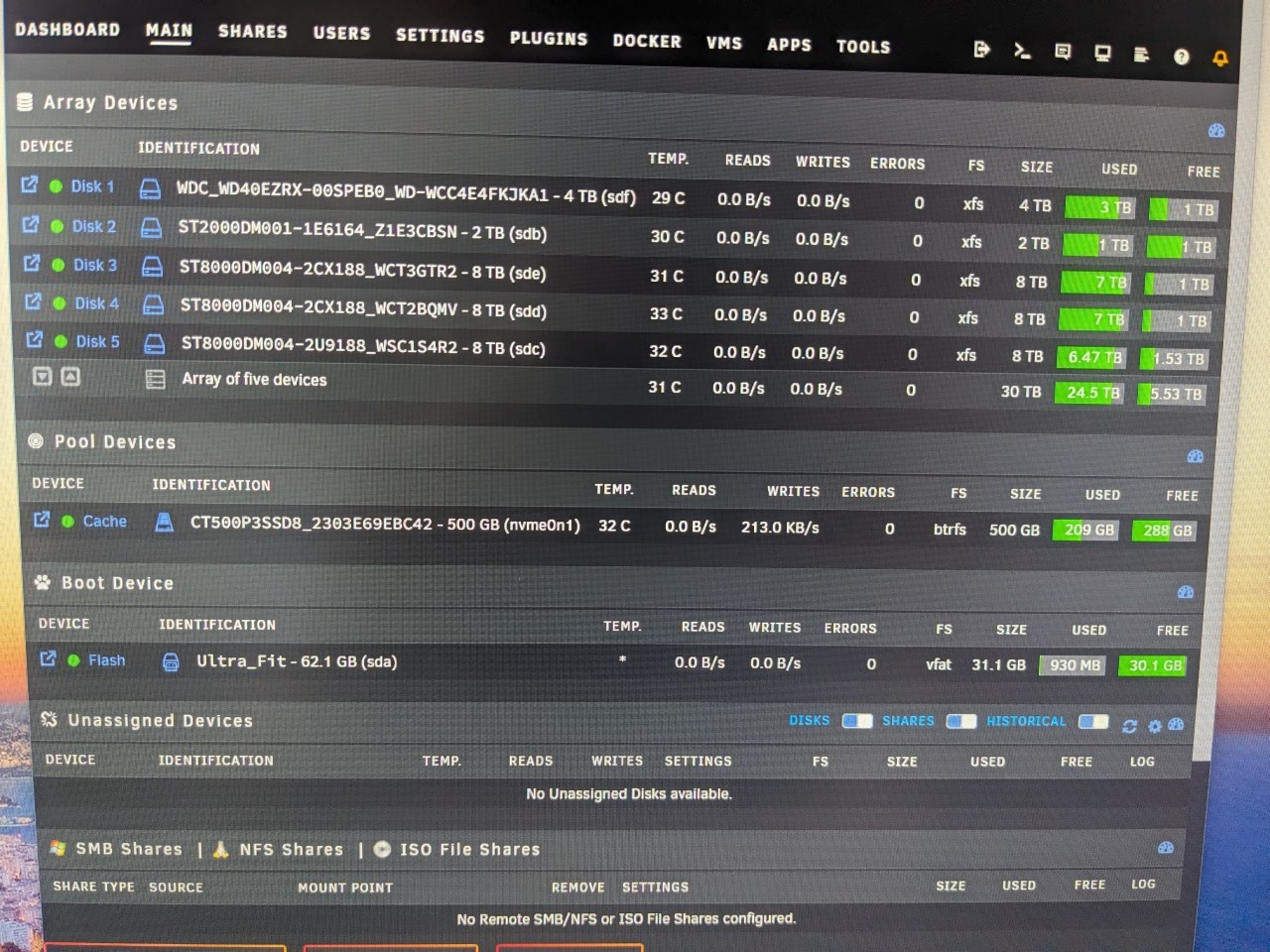

Initially everything was fine. I popped in all my empty drives, set up the array with 1x20TB drive as parity and 1x20TB plus 5x14TB drives as data disks, and started moving stuff over from the backups I had from the previous Drobo NAS, using the Unassigned Devices plugin and a USB 3 hard drive dock.

Well, what has happened twice now is that a device will randomly go disabled with no indication of what's wrong. The blank drives are only about a year old and have shown absolutely no signs of failing before this. They are also still passing the SMART tests with no errors. The first time this happened it was the brand new 20TB drive in the parity slot. I was able to resolve this by unassigning that drive, starting the array, and then stopping it to reassign the drive. That's what a forum post I was able to find suggested, and it seemed to work, but it started a whole new parity sync that was estimated to take a whole week. The thing is, I don't have a week to waste, so I went ahead and started moving files back onto the system again, but now the same thing has happened to disk 3, one of the 14TB drives.

I'm at my wits' end. The first time it happened I couldn't figure it out, so I just wiped the array, reinstalled Unraid, and tried again. Are there any common pitfalls that anyone could recommend I check? Or is anyone just willing to help in general?

My hardware:

Ryzen 7 5700G

64GB of 3200 ECC DDR4 (2x16GB is currently installed; the other 2x16GB shipped late, just arrived yesterday, and still needs to be installed)

8 NAS bays in the front of the case

Blank Drives:

2x20TB, 5x14TB, 1x8TB

Drives with Data on them:

2x20TB, 1x10TB, 1x8TB (totaling around 40 TB of data across them)

Once the data is moved off the drives that currently hold it, I do intend to add them to the array. My NAS case has eight bays plus two internal SSDs that are separate from the NAS bays: one SATA SSD set up as a cache, and one NVMe M.2 set up as a space for appdata.

As of last night before I went to bed I had about 3TB of data moved onto the array, but during the overnight copy something happened to disk 3 that caused it to be marked as offline. I couldn't find any error messages explaining why.

The parity drive was already invalid because I was copying data in while the parity sync was running, and now I can't get the array to start at all.

I tried something a forum post recommended: start the array in maintenance mode with disk 3 unassigned, then stop the array and restart it with disk 3 reassigned to the correct drive. It refuses to do so and tells me that there are too many wrong or missing disks.

The weirdest part is that I know disk 3 still has the data on it, because if I unassign disk 3 and then mount that drive using the Unassigned Devices plugin, I can see that all the data is still there and browsable.

I'm starting to feel real dumb cuz I don't know what I'm doing wrong. I feel like there's got to be something simple that I'm doing wrong and I just can't figure out what it is.