AI/ML research has long been notorious for picking bullshit benchmarks that make an approach look good, and then nobody ever uses that approach because it’s not actually that good in practice.

It’s totally possible that there are legitimate NLP use cases where this approach makes sense, but that is almost entirely separate from the current LLM craze. Also, transformer-based LLMs had pretty much entirely supplanted recurrent networks by around 2018 in basically every NLP task. So even if the semiconductor industry massively reoriented toward producing chips that support “MatMul-free” models like this one just to get the energy reduction, the model outputs would still be even more garbage than they already are.

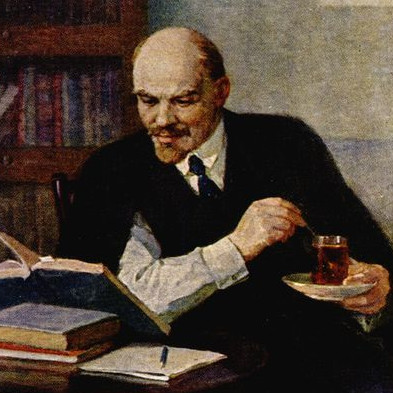

Well, yeah, but perhaps that implies they don’t know who the actual communists are, so they will still target the “commie” demonrats.