Yeah, these are popping up all over the place - all from different users with newly created accounts and no other post/comment history. Most definitely sus.

geekwithsoul

I coalesce the vapors of human experience into a viable and meaningful comprehension.…

- 2 Posts

- 213 Comments

11·12 days ago

In addition to the point about Western mythologies dominating because of cultural exports, I think there is also the undercurrent of England’s original mythologies having been “lost” and so the English were always fascinated by the mythologies of the Norse (due to being invaded) and by the Greeks and Romans (as previous “great” civilizations they aspired to be).

Combine that with America’s obvious English influences and the influence of England as a colonizer around the world, and those mythologies gained a huge outsized influence.

1·17 days ago

I probably didn’t explain well enough. Consuming media (books, TV, film, online content, and video games) is predominantly a passive experience. Obviously video games less so, but all in all, they only “adapt” within the guardrails of gameplay. These AI chatbots however are different in their very formlessness - they’re only programmed to maintain engagement and rely on the LLM training to maintain an illusion of “realness”. And because they were trained on all sorts of human interactions, they’re very good at that.

Humans are unique in how we continually anthropomorphize not only tons of inert, lifeless things (think of someone alternating between swearing at and pleading to a car that won’t start) but also abstract ideals (even scientists often speak of evolution “choosing” specific traits). Given all of that, I don’t think it’s unreasonable to worry that a teen with a still-developing prefrontal cortex, who is in the midst of working out social dynamics and peer relationships, will imbue an AI chatbot with far more “humanity” than is warranted. Humans seem to have an anthropomorphic bias in how we relate to the world - we are the primary yardstick we use to measure and relate everything around us, and things like AI chatbots exploit that to maximum effect. Hell, the whole reason the site mentioned in the article exists is that this approach is extraordinarily effective.

So while I understand that on a cursory look, someone objecting to it comes across as a sad example of yet another moral panic, I truly believe this is different. For one, we’ve never had access to such a lively psychological mirror before and it’s untested waters; and two, this isn’t an objection over some imagined slight against a “moral authority” but one based in the scientific understanding of specifically teen brains and their demonstrated fragility in certain areas while still under development.

3·21 days ago

Same! And if anyone disagrees, feel free to get in the comments! 😉

91·22 days ago

Yes, and it’s clear that they wouldn’t have done an outright temp ban for the length of time they did if you didn’t already have the history you did. Quit painting this as “They couldn’t handle me voicing my opinions” when it was entirely “This guy just won’t stop trolling”. No one really cares about your opinions, they cared about you trolling the community. You trying to make a martyr of yourself is ridiculous.

82·22 days ago

I got a two-week ban in a community (for posting duplicate article from different sources)

Dude, you got it for that AND a history of trolling, even just after coming off a previous temp ban for trolling. Quit lying.

51·23 days ago

I understand what you mean about the comparison between AI chatbots and video games (or whatever the moral panic du jour is), but I think they’re very much not the same. To a young teen, no matter how “immersive” the game is, it’s still just a game. They may rage against other players, they may become obsessed with playing, but as I said they’re still going to see it as a game.

An AI chatbot that is a troubled teen’s “best friend” is different and no matter how many warnings are slapped on the interface, it’s going to feel much more “real” to that kid than any game. They’re going to unload every ounce of angst into that thing, and by defaulting to “keep them engaged”, that chatbot is either going to ignore stuff it shouldn’t or encourage them in ways that it shouldn’t. It’s obvious there are no real guardrails in this instance, because if he was talking about being suicidal, some red flags should’ve popped up.

Yes the parents shouldn’t have allowed him such unfettered access, yes they shouldn’t have had a loaded gun that he had access to, but a simple “This is all for funsies” warning on the interface isn’t enough to stop this from happening again. Some really troubled adults are using these things as de facto therapists and that’s bad too. But I’d be happier if lawmakers were much more worried about kids having access to this stuff than accessing “adult sites”.

14·23 days ago

Really appreciate their coverage of pretty much everything - lots of detail, no fluff, and no over the top headlines.

And yep, fuck insurance. Helped make the entire point of US healthcare providing profit for big corps and not actually patient wellness.

5·23 days ago

It’s middle men all the way down…

61·23 days ago

Had the same thought :)

That’s certainly where the term originated, but usage has expanded. I’m actually fine with it, as the original idea was about the pattern recognition we use when looking at faces, and I think there are similar mechanisms for matching other “known” patterns we see. Probably with some sliding scale of emotional response on how well known the pattern is.

21·25 days ago

I think we’re talking about different time periods. In the time I’m talking about, before AOL connected with Usenet, the number of high school kids on the actual internet could probably be measured in double digits. There were BBSes, which had their own wonderful culture, but they had trolls and villains in a way that Usenet did not.

It was higher than you think. While an outlier, realize WarGames came out in 1983. I grew up in the suburbs of DC, and by 1986, a number of us had modems and regularly dialed into local BBSes. Basically as soon as we got 2400 bits/s, it started to get more widespread. And honestly since we usually knew the admin running the BBS we dialed into, there were fewer serious trolling issues. But newsgroups were another matter - usually folks were pretty much anonymous and from all over, and while there could be a sense of community, there were healthy amounts of trolls. What you’re describing is the literal exact opposite of my own lived experience. Nothing wrong with that, and doesn’t mean either of us is wrong, just means different perspectives/experiences.

31·25 days ago

I definitely don’t think this is true. That’s the whole “eternal September” thing.

I mean, it was my literal experience as a user. And it wasn’t just September, the first wave was June when high schoolers started summer break and spent considerable time online, and then the second wave in September with college kids. Honestly the second wave wasn’t as bad, as the college kids were using their university’s connection and they usually had some idea that if they went too far there might be consequences. Whereas the summer break latchkey high school kids were never that worried about any consequences.

I’m mostly talking about the volunteer internet. I don’t have any active accounts on commercial social media, even for business things.

I know, but that’s part of my point. The things that make online places feel safe, welcoming, and worthwhile are the same regardless of whether they’re volunteer or commercial. I absolutely loved 2007 - 2012 early Twitter - it actually felt like the best of my old BBS/Usenet days but with much better scope. But I haven’t regularly been on there since 2016-ish, and completely left Reddit in July of last year (despite having had an account since 2009). For me the volunteer and federated social media has the best shot at being a “good” place, but I don’t have any philosophical objection to seeing commercial social media become less horrible, and in terms of understood and agreed upon social contract, I think approaching both with the same attitude should be encouraged.

We don’t need the commercial social media to fail for us to succeed, we need to change how people think about how they participate in online spaces and how those spaces should be managed and by whom.

2·25 days ago

In that case, I think the whole question is moot. The umbrella term of thingamawidget is not both modular and versatile, but its constituent parts are individually. “The thingamawidget with versatile software and modular hardware is…” would then be the more accurate description.

Otherwise it’s like describing a brownie as wet and bitter because the egg is wet and the raw cocoa is bitter.

171·25 days ago

The old-school internet had a strong social contract. There are little remnants surviving, that seem hilarious and naive in the modern day, but for the most part the modern internet has been taken over by commercial villains to such an extreme degree that a lot of the norms that held it together during the golden age are just forgotten by now.

So, I’ve been online in some form or another since the late '80s - back in the old BBS, dial-up, and Usenet days. I think there are actually different factors at play.

To start with, Usenet was often just as toxic as any current social media/forum site. The same percentage of trolls, bad actors, etc. That really hasn’t increased or decreased in my online lifetime. The only real difference was the absolute power wielded by a BBS or server admin, and that power was exercised capriciously for both good and bad. Because keeping these things up and running was a commitment, the people making the decisions were often the ones directly keeping servers online and modem banks up and running. Agree or disagree with the admins, you couldn’t deny they were creating spaces for the rest of us to interact.

Then we started to get the first web based news sites with a social aspect (Slashdot/Fark/Digg/etc). And generally there wasn’t just one person making decisions and if they wanted to make any money they had to not scare off advertisers, so that started making things different (again for good and for bad). It was teams of people keeping things going and moderation was often a separate job. Back in the day I remember on multiple occasions a moderator making one call and then a site owner overruling them. It was at this time the view on moderation really began to change.

Nowadays giant mega corps run the social media sites and manage the advertising themselves so they’re answerable to no one other than psychotic billionaires, faceless stockholders and executive tech bros with a lot of hubris. Moderation is often led by algorithmic detection and then maybe a human. Appeals often just disappear into a void. It has all become an unfeeling, uncaring technocracy where no one is held accountable other than an occasional user, and never the corporation, execs, or owners.

Like yourself, not sure how to fix it, but splitting the tech companies apart from their advertising divisions would be step one. Probably would be helpful to require social media companies to be standalone businesses. Would at least be easier to hold them accountable. And maybe require that they be operated as nonprofits? To help disincentivize the kind of behavior we’ve got now.

3·30 days ago

Just imagine what happens when he loses to the chatbot

131·1 month ago

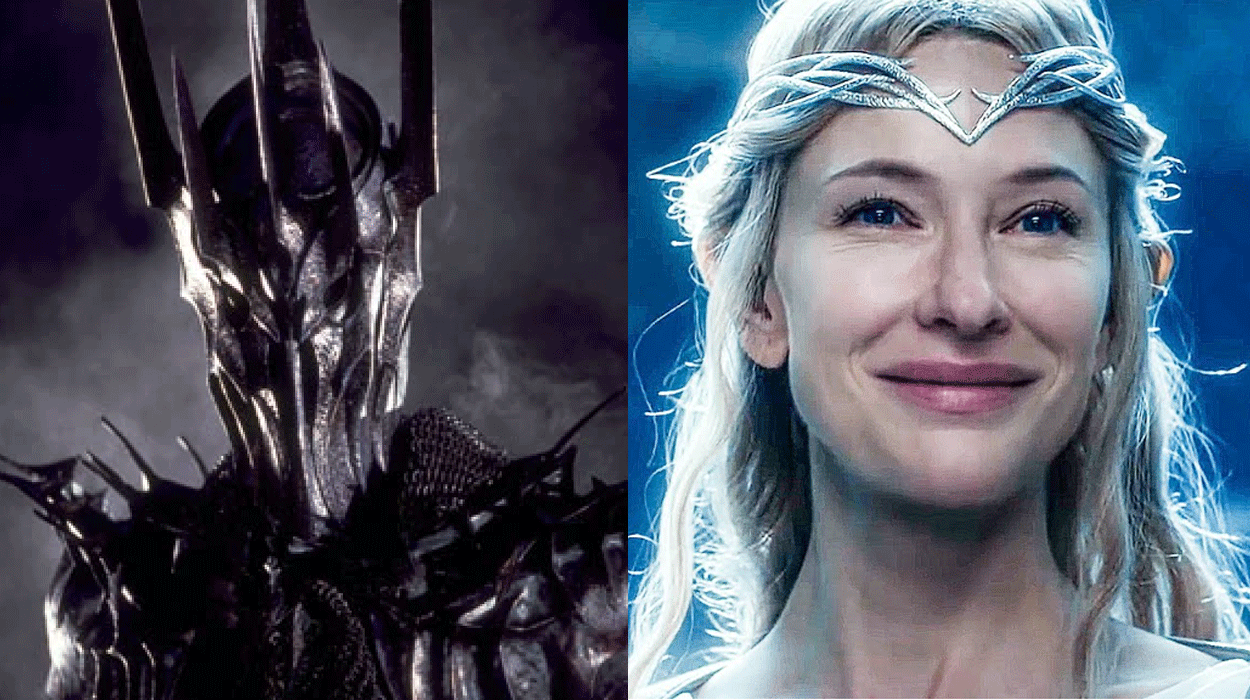

Yeah, I swear I just heard one of them say “Looks like Geek is back on the menu, boys!”

I didn’t even know orcs had restaurants? How do they know what a menu is?!

41·1 month ago

Ooh, tell me more! I used to love a good fairy tale. And this one sounds like a doozy! But just like you started, make sure it doesn’t have anything that matches reality - that’d be great. Thanks!

Wow, if they’re spending money on ballot curing, those internal Trump polls must have scared the crap out of them.