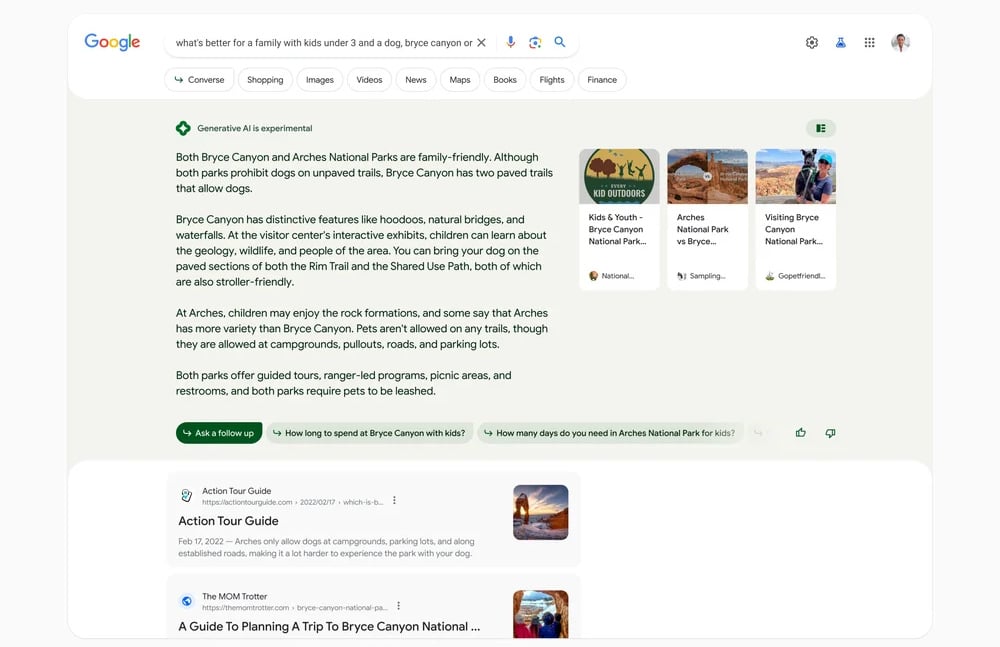

If you’re in the US, you might see a new shaded section at the top of your Google Search results with a summary answering your query, along with links for more information. That section, generated by Google’s generative AI technology, used to appear only if you had opted into the Search Generative Experience (SGE) in the Search Labs platform. Now, according to Search Engine Land, Google has started adding the experience on a “subset of queries, on a small percentage of search traffic in the US.” That is why you could be getting Google’s experimental AI-generated section even if you haven’t switched it on.

All the talk about how much computing power and electricity AI uses, and then Google and Bing just run it for every (most? many? some?) search.

Isn’t training the models the most energy-intensive part? Generating some text in answer to a question probably isn’t super intensive. Caveat: I know nothing.

Yes, training is the most expensive part, but it’s still an additional trillion or so floating-point operations per generated token of output. That’s not nothing computationally.

Just consider how long it takes GPT-4 to answer a question: anywhere from a few seconds to a minute in my experience. There’s at least one A100, probably drawing 400 W, going full throttle that whole time, plus all the supporting hardware.
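
A rough back-of-envelope version of that estimate, assuming a single GPU drawing about 400 W for the whole response time and ignoring the supporting hardware (both figures are the commenter’s guesses, not measurements; the function name `answer_energy_wh` is just illustrative):

```python
# Back-of-envelope energy estimate for one chatbot answer.
# Assumptions (from the comment above, not measured):
#   - a single GPU drawing ~400 W for the entire response time
#   - supporting hardware (CPU, RAM, networking, cooling) ignored
GPU_WATTS = 400  # assumed draw of one A100 under full load


def answer_energy_wh(seconds: float, watts: float = GPU_WATTS) -> float:
    """Energy in watt-hours for one answer that keeps the GPU busy for `seconds`."""
    return watts * seconds / 3600  # W * s = J; 3600 J = 1 Wh


if __name__ == "__main__":
    for seconds in (5, 60):  # "a few seconds to a minute"
        print(f"{seconds:>3} s -> {answer_energy_wh(seconds):.2f} Wh")
```

Under those assumptions, a 5-second answer comes to roughly 0.56 Wh and a one-minute answer to roughly 6.67 Wh, per query, before counting the supporting hardware or multiplying by the number of searches it runs on.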

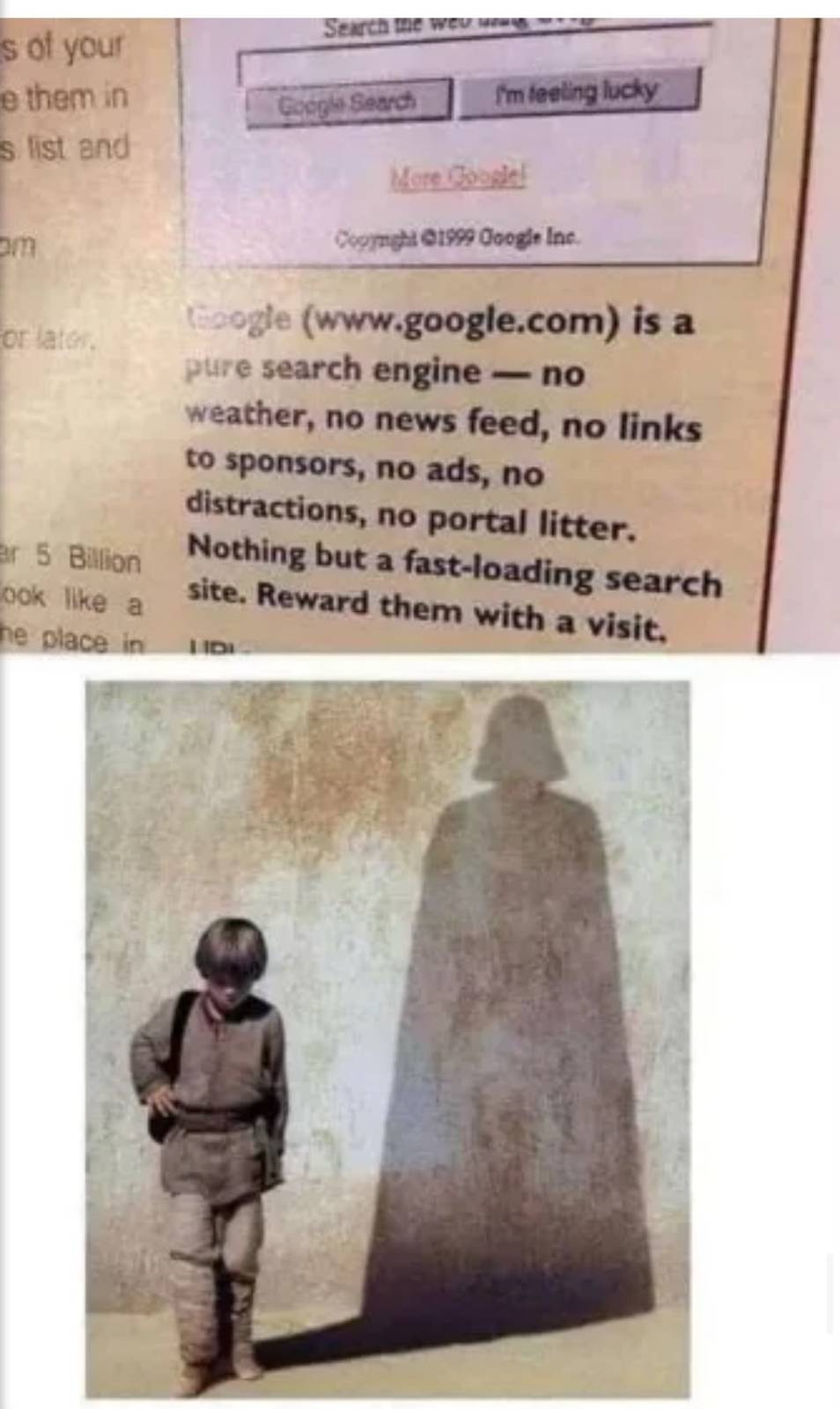

Normal search results are already littered with useless AI-generated, SEO-optimized crap. It’s gotten to the point where it’s sometimes quicker to find what you’re looking for the old-fashioned way: by reading books.

Enshittification must lose.

🤓 Well, actually, you see, we trained all our models on all historical text written by humans, so it will be more human and you’ll never have to read again.

I’ve seen sentiments akin to this online, and it’s very baffling.

Almost every time I ask a direct question, the two AI answers directly contradict each other. Yesterday I asked if vinegar cuts grease. I received explanations both for why it’s an excellent grease cutter and for why it isn’t, because it’s an acid.

I think this will be a major issue with AI. Just because it was trained on a huge wealth of knowledge doesn’t mean that it was trained on correct knowledge.

I don’t see why being trained on writing informed by correct knowledge would make it frequently correct, unless you’re expecting it to lift sentences verbatim from its training data.

No, they won’t, because I don’t use their shady as fuck services.

Well, you aren’t a Google search user, so you won’t get it. The headline addressed that.

Another win for the Startpage gang.

Technically, if you submit a query to the search engine, you do so because you want an answer to a question in the best way possible without having to do too much digging.

So does it matter if it uses AI to help you? I say it’s a great feature.

We’re in the technology sub. People here are old enough to know how to Google (old forums, preferably Reddit, as Lemmy is absent), but they don’t know how to use an AI effectively (just look at how they’re trying to justify that). Don’t worry about the downvotes and their nonsense responses. Those are the same people who microwave their water instead of using an electric kettle.

I actually get a kick out of the downvote score.

As on all social media, people here (group)think that they are the smartest. But Lemmy is also a bubble, one full of people who don’t want to innovate or experience new things. Very weird for something so tech-focused.

No. A lot of times I’m looking to compare many answers. I’ll give you an example.

If I want to look for interesting barbecue rubs that I haven’t tried before I’ll query a search engine. Historically (not so much recently) Google has been better at searching through forums than a direct forum search. So I can check many different sources for the ratios people are using and make my decision.

Google’s half-baked AI is really terrible right now. It has a memory of about two answers, barely understands context, and hallucinates more often than both Copilot and ChatGPT.

Now I’m looking for a coffee rub and it’s giving me injection advice (happened when I tested Gemini), it gets barbecue styles mixed up, doesn’t follow dietary restrictions that are explicitly stated, and will give you recipes for the wrong cut and type of meat.

It’s not ready, and anyone trusting it for an answer to a question is going to have a bad time. If you have to verify it by checking a bunch of links anyway, then it’s not only worthless, it’s making search take longer and eating up screen real estate.

> Now I’m looking for a coffee rub and it’s giving me injection advice

Wooooo what?

Brisket coffee rub is fairly common. Coffee injection isn’t; I only know one guy who uses one, although other injections are pretty common for brisket.

I have no idea why it went off on that particular tangent. I guess whatever barbecue data it was trained on had a lot of injection advice along with the coffee rubs.
